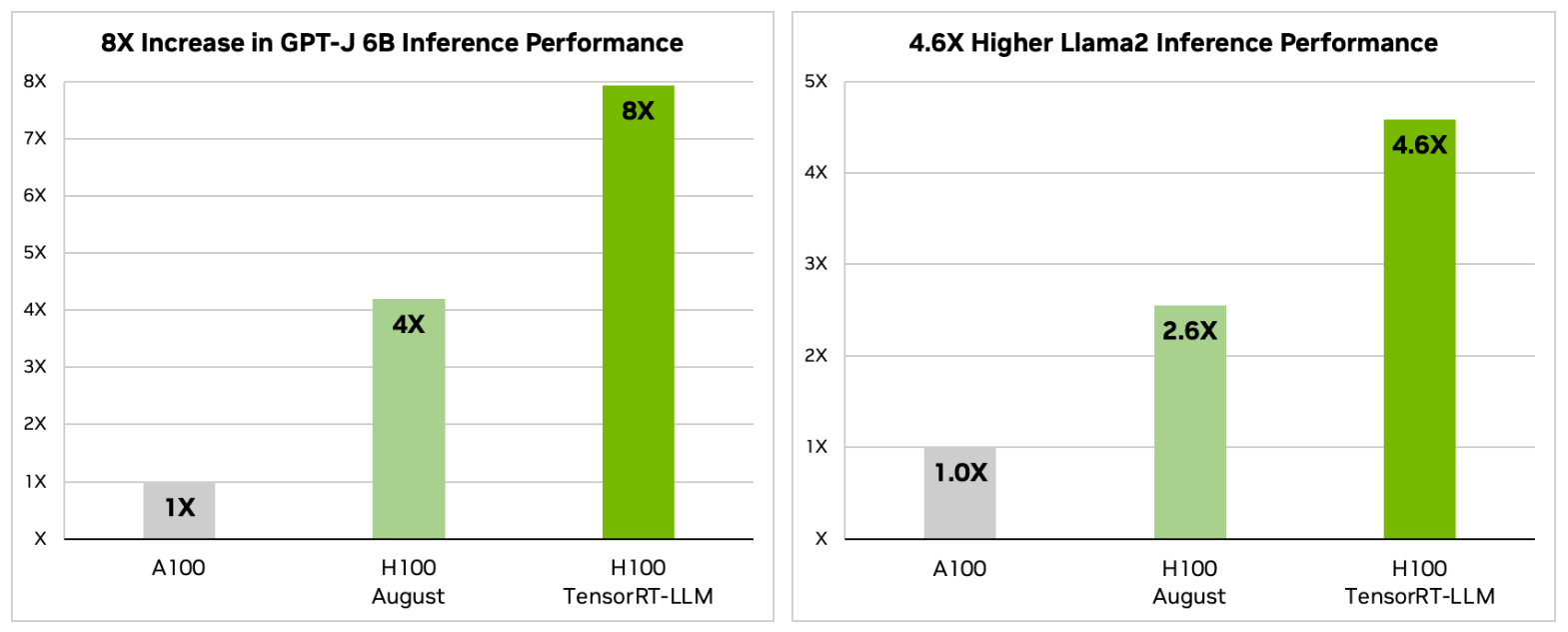

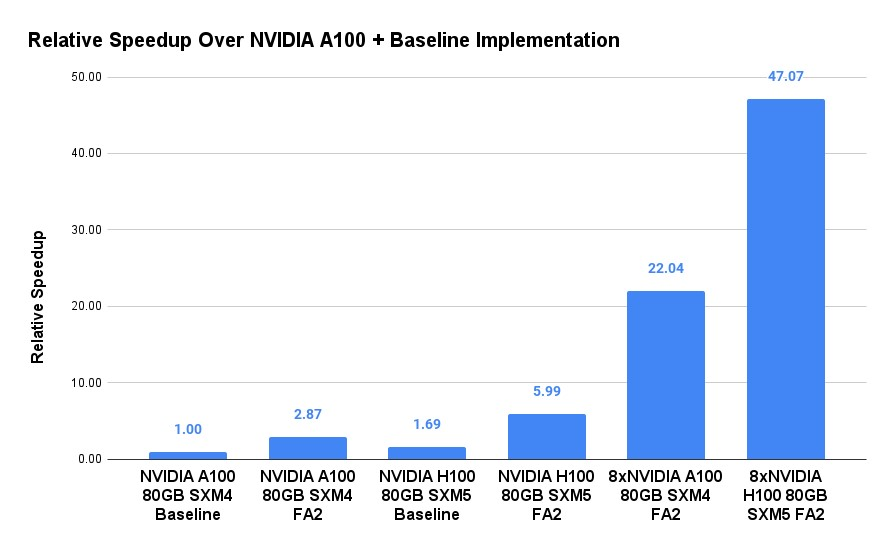

- According to benchmarks by NVIDIA and independent parties, the H100 offers double the computation speed of the A100.
- What are the key differences between the A100 and H100 regarding performance? The A100 is designed for high-performance computing (HPC)…
- According to NVIDIA, H100 performance can be up to 30x better for inference and 9x better for training. This comes from higher GPU memory…
- Whether the A100 or H100 is worth purchasing depends on the user's specific needs. Both GPUs are highly suitable for high-performance…
- Overall, the H100 provides approximately 6x compute performance improvement over the A100 when factoring in all the new compute…

- An Order-of-Magnitude Leap for Accelerated Computing.
- The NVIDIA H100 Tensor Core GPU will be used to power the world's 10th fastest supercomputer.
- In a landmark moment for India's artificial intelligence ambitions, Yotta Data Services today announced…
- The board costs ¥4,745,950 ($36,405), which includes a ¥4,313,000 base price ($32,955), a…

- The NVIDIA H100 NVL supports double precision (FP64), single precision (FP32), half precision (FP16), 8-bit floating point (FP8), and integer (INT8) compute tasks. The NVIDIA H100 NVL card is a dual-slot 10.5-inch PCI…
- An Order-of-Magnitude Leap for Accelerated Computing. Tap into unprecedented performance, scalability, and security for…
- NVIDIA NVLink is a high-speed point-to-point (P2P) peer transfer connection, where one GPU can transfer data to and receive data from one other GPU. The NVIDIA H100 card supports NVLink bridge connection with a…
- The H100 NVL is an interesting variant on NVIDIA's H100 PCIe card that, in a sign of the times and NVIDIA's extensive success in the AI field, is aimed at a singular market…
- NVIDIA H100 Tensor Core GPU Datasheet: This datasheet details the performance and product specifications of the NVIDIA H100 Tensor Core GPU. It also explains the technological breakthroughs of…
- The NVIDIA H100 NVL Tensor Core GPU enables an order-of-magnitude leap for large-scale AI and HPC, with unprecedented performance, scalability, and security for every data center, and includes the NVIDIA AI…
- The NVIDIA H100 Tensor Core GPU delivers unprecedented performance, scalability, and security for every workload. With NVIDIA NVLink Switch System, up to 256 H100 GPUs can be connected to accelerate exascale…
- Called the H100 NVL, the GPU is a unique edition design based on the regular H100 PCIe version. What makes the H100 NVL version so special is the boost in memory capacity, now up from 80 GB in the standard…
- The ThinkSystem NVIDIA H100 PCIe Gen5 GPU delivers unprecedented performance, scalability, and security for every workload. The GPUs use breakthrough innovations in the NVIDIA Hopper…
- H100 NVL checks in at 3.9 TB/s per GPU and a combined 7.8 TB/s, versus 2 TB/s for the H100 PCIe and 3.35 TB/s on the H100 SXM. As this is a dual-card solution, with each card occupying a…
- NVIDIA H100 NVL for Large Language Model Deployment is ideal for deploying massive LLMs like ChatGPT at scale. The new H100 NVL with 94GB of memory with Transformer Engine acceleration delivers up to 12x…
- The NVIDIA H100 NVL is the company's fastest and most efficient H100 series GPU at its launch, boasting an updated memory subsystem.
- GTC: Powering a new era of computing, NVIDIA today announced that the NVIDIA Blackwell platform has arrived, enabling organizations everywhere to build and run real-time generative AI on trillion…
- The NVIDIA H100 NVL Tensor Core GPU is optimized for large language model (LLM) inference, with its high compute density, high memory bandwidth, high energy efficiency, and unique NVLink architecture.
- NVIDIA L40S is the NVIDIA H100 AI Alternative with a Big Benefit: NVIDIA H100 SXM, PCIe, and NVL Spec Table.
- A high-level overview of NVIDIA H100, new H100-based DGX, DGX SuperPOD, and HGX systems, and a H100-based Converged Accelerator. This is followed by a deep dive into the H100 hardware architecture.
- 1 terabyte per second of peak bidirectional network bandwidth. Dual Intel Xeon Platinum 8480C processors, 112 cores total, and 2 TB system memory. Powerful CPUs for the most intensive AI jobs.
- NVIDIA H100 PCIe 94GB, H100 NVL, H100L-94C. This document and all NVIDIA design specifications…
- The NC H100 v5-series virtual machine (VM) is a cutting-edge addition to the Azure GPU virtual machines family. Designed for mid-range AI model training and generative inferencing, and HPC simulation workloads…
- This section provides highlights of the NVIDIA Data Center GPU R550 Driver (version 550.54.15 Linux and 551.78 Windows). For changes related to the 550 release of the NVIDIA display driver, review the file…
- On Monday, Nvidia unveiled the Blackwell B200 tensor core chip, the company's most powerful single-chip GPU, with 208 billion transistors, which Nvidia claims can reduce AI inference…
- Microsoft is also announcing the general availability of its Azure NC H100 v5 virtual machine (VM), based on the NVIDIA H100 NVL platform. Designed for midrange training and inferencing, the NC series of…

- For LLMs up to 175 billion parameters, the PCIe-based H100 NVL with NVLink bridge utilizes Transformer Engine, NVLink, and 188GB HBM3 memory to…
- Specifications shown for two H100 NVL PCIe cards paired with the bridge.
- The NVIDIA H100 card is a dual-slot 10.5-inch PCI Express Gen5 card based on the NVIDIA Hopper architecture. It uses a passive heat sink for cooling, which…
- NVIDIA H100 Accelerator Specification Comparison.
- This datasheet details the performance and product specifications of the NVIDIA H100 Tensor Core GPU. It also explains the technological breakthroughs of the…
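
The dual-card H100 NVL figures quoted on this page can be checked with simple arithmetic. The following is a minimal sketch, taking 94 GB of memory and 3.9 TB/s of bandwidth per card. The bytes-per-parameter table and the function names are illustrative assumptions, not taken from any NVIDIA document. The estimate counts model weights only and ignores the KV cache, activations, and runtime overhead.

```python
# Per-card H100 NVL figures as quoted in the snippets on this page.
NVL_MEMORY_GB_PER_CARD = 94
NVL_BANDWIDTH_TBS_PER_CARD = 3.9
CARDS_PER_PAIR = 2

# Assumption for illustration: storage cost of one parameter per precision.
BYTES_PER_PARAM = {"FP16": 2, "FP8": 1}


def pair_memory_gb() -> int:
    """Combined memory of two bridged NVL cards, in GB."""
    return NVL_MEMORY_GB_PER_CARD * CARDS_PER_PAIR


def pair_bandwidth_tbs() -> float:
    """Combined memory bandwidth of two NVL cards, in TB/s."""
    return NVL_BANDWIDTH_TBS_PER_CARD * CARDS_PER_PAIR


def weights_gb(params_billion: float, precision: str) -> float:
    """Weight footprint in decimal GB: billions of parameters times bytes each."""
    return params_billion * BYTES_PER_PARAM[precision]


def weights_fit(params_billion: float, precision: str) -> bool:
    """True if the weights alone fit in the combined memory of the pair."""
    return weights_gb(params_billion, precision) <= pair_memory_gb()


if __name__ == "__main__":
    print("pair memory (GB):", pair_memory_gb())
    print("pair bandwidth (TB/s):", pair_bandwidth_tbs())
    for precision in BYTES_PER_PARAM:
        print(precision, weights_gb(175, precision), weights_fit(175, precision))
```

Under these assumptions, a 175-billion-parameter model needs about 175 GB for its weights at FP8, which is within the pair's 188 GB, while the same model at FP16 needs about 350 GB and would not fit. The source snippets do not say which precision the 175-billion-parameter claim assumes.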
